How Amazon S3 Achieves Strong Consistency Without Sacrificing 99.99% Availability

#79: Break Into Amazon S3 Architecture (4 Minutes)

This post outlines how Amazon S3 achieves strong consistency. You will find references at the bottom of this page if you want to go deeper.

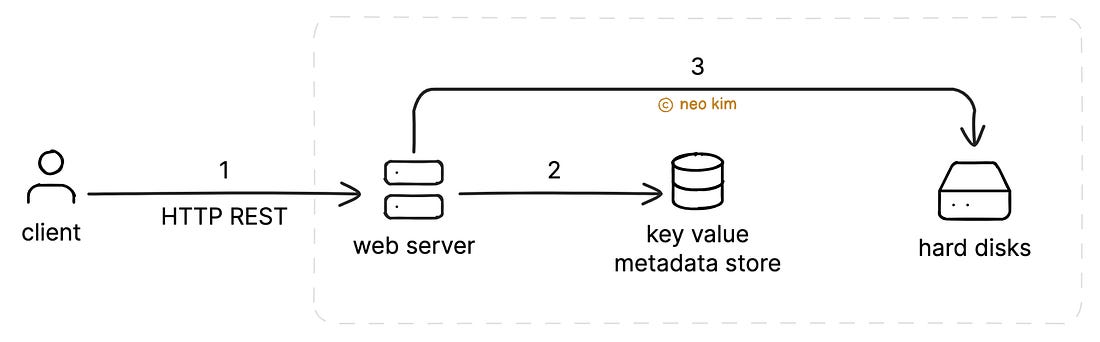

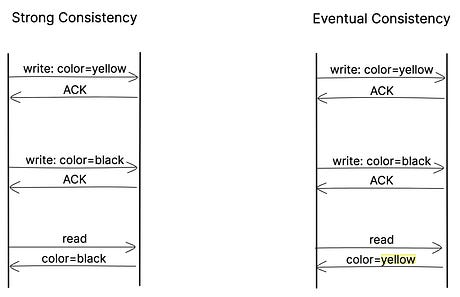

Note: This post is based on my research and may differ from the actual implementation.

Once upon a time, there was a data processing startup. They converted raw data into a structured format. They had only a few customers, so an on-premise server was enough.

But one morning, they got a new customer with an extremely popular site. This meant massive data storage needs. Yet their storage server had only limited capacity.

So they moved to Amazon Simple Storage Service (S3), an object storage service. It stores unstructured data without hierarchy.

S3 provides a REST API via a web server, and it stores metadata and file content separately for scale. It keeps the metadata of data objects in a key-value database. It also caches the metadata for low latency and high availability.

Although caching metadata improves performance, some requests might return an older version of the metadata, because there could be network partitions in a distributed architecture. A write can go to one cache partition while a read goes to another, so the read sees metadata that has not caught up yet. The result is eventual consistency.

But data processing needs strong consistency for ordering and correctness, and adding extra app logic to get it increases infrastructure complexity.

Onward.
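
The stale-read problem described above can be reproduced with a toy model. This is a minimal Python sketch, not S3's implementation: `MetadataStore`, `CacheNode`, and the `report.csv` key are hypothetical names made up for illustration, and each cache partition is assumed to learn only about the writes routed through it.

```python
class MetadataStore:
    """Stand-in for the key-value metadata database (the source of truth)."""

    def __init__(self):
        self.data = {}


class CacheNode:
    """One cache partition. It only sees writes that are routed through it."""

    def __init__(self, store):
        self.store = store
        self.entries = {}

    def write(self, key, metadata):
        # Update this partition's cache and the backing store.
        self.entries[key] = metadata
        self.store.data[key] = metadata

    def read(self, key):
        # Serve from the local cache when possible, otherwise load from the store.
        if key in self.entries:
            return self.entries[key]
        value = self.store.data.get(key)
        if value is not None:
            self.entries[key] = value
        return value


store = MetadataStore()
cache_a, cache_b = CacheNode(store), CacheNode(store)

cache_a.write("report.csv", {"version": 1})
first_read = cache_b.read("report.csv")      # cache_b now caches version 1
cache_a.write("report.csv", {"version": 2})  # the update only reaches cache_a
stale_read = cache_b.read("report.csv")      # cache_b still serves version 1
fresh_read = cache_a.read("report.csv")      # cache_a serves version 2
```

Here `stale_read` lags behind the store even though the write already succeeded. Nothing tells `cache_b` that its entry is outdated, and that is the gap a freshness check has to close.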

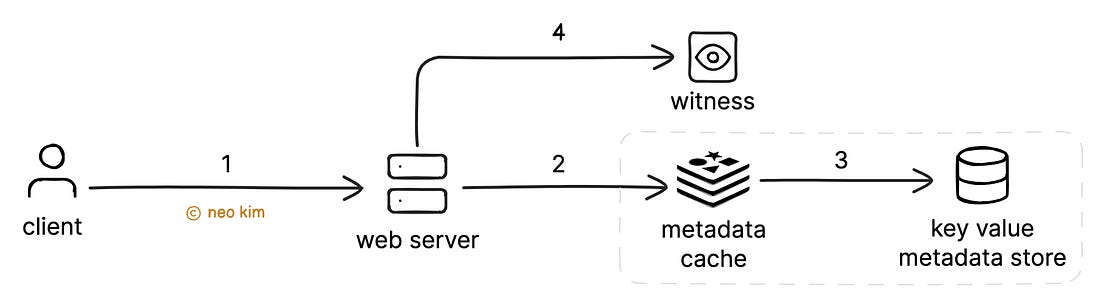

S3 Strong Consistency

It’s difficult to achieve strong consistency at scale without performance or availability tradeoffs. So smart engineers at Amazon used simple ideas to solve this hard problem. Here’s how:

1. Write Path

They update the metadata cache using the write-through pattern. It means the write coordinator updates the cache first, then updates the metadata store synchronously. This reduces the risk of a stale cache.

They also set up a separate service to track cache freshness and called it the witness. It stores only the latest version of a data object and stays lightweight (in-memory) for low latency. The write coordinator notifies the witness whenever there’s a metadata update.

Besides, they introduced a transaction log in the metadata store. It tracks the order of operations and allows them to check if the cache is fresh.

Ready for the best part?

2. Read Path

Here’s the read request workflow:
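
One way to picture the write path and a witness-checked read is the toy Python model below. It is a sketch under stated assumptions, not Amazon's code: every class name is hypothetical, freshness is modeled as a per-key sequence number taken from the store's transaction log, and for simplicity the toy writes the store before filling the cache so it can reuse that sequence number (both updates still happen synchronously within one call).

```python
class WitnessUnavailable(Exception):
    """Raised when the witness cannot be reached."""


class MetadataStore:
    """Durable store with a transaction log that orders every write."""

    def __init__(self):
        self.log = []      # append-only list of (sequence, key, metadata)
        self.latest = {}   # key -> (sequence, metadata)

    def put(self, key, metadata):
        seq = len(self.log) + 1
        self.log.append((seq, key, metadata))
        self.latest[key] = (seq, metadata)
        return seq

    def get(self, key):
        return self.latest.get(key)


class Witness:
    """In-memory tracker of the newest sequence number per key."""

    def __init__(self):
        self.newest = {}
        self.reachable = True  # flipped to False to simulate an outage

    def record(self, key, seq):
        self.newest[key] = max(seq, self.newest.get(key, 0))

    def newest_seq(self, key):
        if not self.reachable:
            raise WitnessUnavailable(key)
        return self.newest.get(key, 0)


class CacheNode:
    """Cache partition that asks the witness before trusting its entry."""

    def __init__(self, store, witness):
        self.store, self.witness = store, witness
        self.entries = {}  # key -> (sequence, metadata)

    def write(self, key, metadata):
        # Write-through: store and cache are updated in the same call,
        # then the witness is told about the new sequence number.
        seq = self.store.put(key, metadata)
        self.entries[key] = (seq, metadata)
        self.witness.record(key, seq)
        return seq

    def read(self, key):
        cached = self.entries.get(key)
        try:
            fresh = cached is not None and cached[0] >= self.witness.newest_seq(key)
        except WitnessUnavailable:
            fresh = False  # freshness cannot be proven, so treat the cache as stale
        if fresh:
            return cached[1]
        current = self.store.get(key)  # fall back to the metadata store
        if current is None:
            return None
        self.entries[key] = current
        return current[1]


store, witness = MetadataStore(), Witness()
node_a, node_b = CacheNode(store, witness), CacheNode(store, witness)

node_a.write("report.csv", {"size": 10})
node_b.read("report.csv")                 # node_b caches sequence 1
node_a.write("report.csv", {"size": 20})  # node_b's entry is now outdated
after_write = node_b.read("report.csv")   # witness reports sequence 2, so node_b refetches

witness.reachable = False
during_outage = node_b.read("report.csv")  # witness down: read goes to the store
```

In this model a read never returns metadata older than the last acknowledged write, because the cache entry is served only when the witness confirms that no newer sequence number exists.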

This is how they achieve strong consistency. Put simply, they find out if the metadata cache is fresh using the witness. Think of the witness as a central observer of metadata changes and a checkpoint for reads.

They also assume the cache to be stale if the server cannot reach the witness. If so, they fetch the data directly from the metadata store.

Yet the witness shouldn’t affect S3’s overall performance or 99.99% availability. So they scale the witness servers horizontally and set up automation to replace failed servers quickly. Besides, they redistribute the traffic when a witness server fails.

S3 supports 100 trillion data objects at 10 million requests per second. It offers strong read-after-write consistency using the witness and a consistent metadata cache, which makes the app logic simpler.

References