My 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX

My 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX

Plus the system prompt behind the new ChatGPT study mode

In this newsletter:

Plus 7 links and 2 quotations

My 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX - 2025-07-29

I wrote about the new GLM-4.5 model family yesterday - new open weight (MIT licensed) models from Z.ai in China which their benchmarks claim score highly in coding even against models such as Claude Sonnet 4.

The models are pretty big - the smaller GLM-4.5 Air model is still 106 billion total parameters, which is 205.78GB on Hugging Face.

Ivan Fioravanti built this 44GB 3bit quantized version for MLX, specifically sized so people with 64GB machines could have a chance of running it. I tried it out... and it works extremely well.

I fed it the following prompt: "Write an HTML and JavaScript page implementing space invaders"

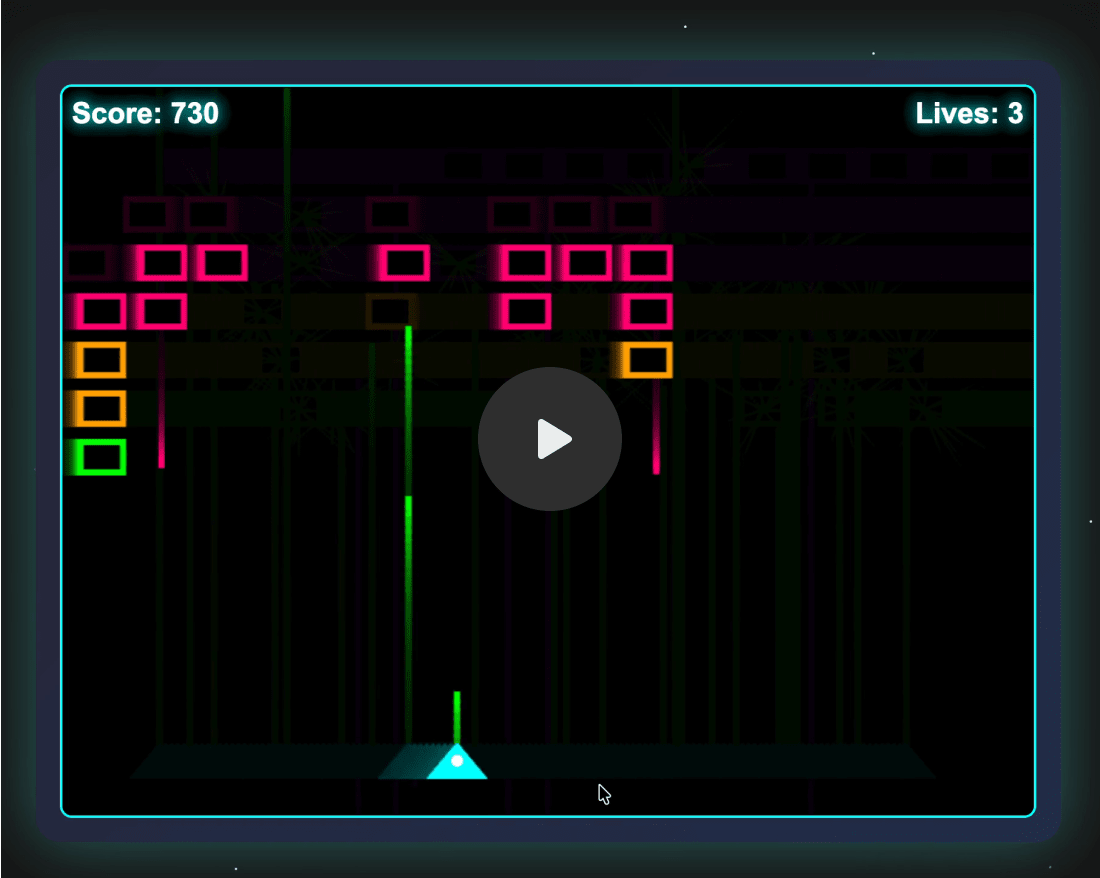

And it churned away for a while and produced the following:

Clearly this isn't a particularly novel example, but I still think it's noteworthy that a model running on my 2.5 year old laptop (a 64GB MacBook Pro M2) is able to produce code like this - especially code that worked first time with no further edits needed.

How I ran the model

I had to run it using the current

Then in that Python interpreter I used the standard recipe for running MLX models:

That downloaded 44GB of model weights to my

Then:

The response started like this:

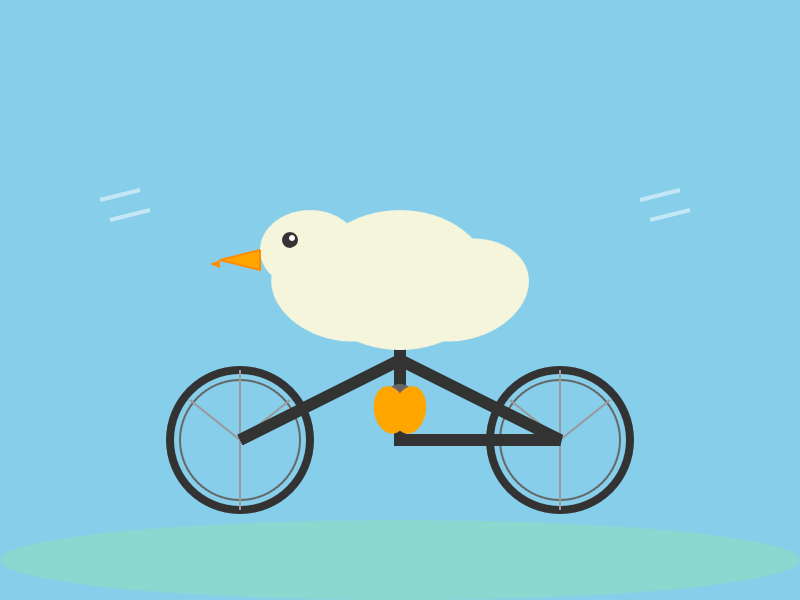

Followed by the HTML and this debugging output:

You can see the full transcript here, or view the source on GitHub, or try it out in your browser.

A pelican for good measure

I ran my pelican benchmark against the full sized models yesterday, but I couldn't resist trying it against this smaller 3bit model. Here's what I got for "Generate an SVG of a pelican riding a bicycle":

Here's the transcript for that.

In both cases the model used around 48GB of RAM at peak, leaving me with just 16GB for everything else - I had to quit quite a few apps in order to get the model to run but the speed was pretty good once it got going.

Local coding models are really good now

It's interesting how almost every model released in 2025 has specifically targeted coding. That focus has clearly been paying off: these coding models are getting really good now.

Two years ago when I first tried LLaMA I never dreamed that the same laptop I was using then would one day be able to run models with capabilities as strong as what I'm seeing from GLM 4.5 Air - and Mistral 3.2 Small, and Gemma 3, and Qwen 3, and a host of other high quality models that have emerged over the past six months.

Link 2025-07-26 Official statement from Tea on their data leak:

Tea is a dating safety app for women that lets them share notes about potential dates. The other day it was subject to a truly egregious data leak caused by a legacy unprotected Firebase cloud storage bucket:

Storing and then failing to secure photos of driving licenses is an incredible breach of trust. Many of those photos included EXIF location information too, so there are maps of Tea users floating around the darker corners of the web now.

I've seen a bunch of commentary using this incident as an example of the dangers of vibe coding. I'm confident vibe coding was not to blame in this particular case, even while I share the larger concern of irresponsible vibe coding leading to more incidents of this nature.

The announcement from Tea makes it clear that the underlying issue relates to code written prior to February 2024, long before vibe coding was close to viable for building systems of this nature:

Also worth noting is that they stopped requesting photos of ID back in 2023:

Update 28th July: A second breach has been confirmed by 404 Media, this time exposing more than one million direct messages dated up to this week.

Link 2025-07-27 Enough AI copilots! We need AI HUDs:

Geoffrey Litt compares Copilots - AI assistants that you engage in dialog with and that work with you to complete a task - with HUDs, Head-Up Displays, which enhance your working environment in less intrusive ways.

He uses spellcheck as an obvious example, providing underlines for incorrectly spelt words, and then suggests his AI-implemented custom debugging UI as a more ambitious implementation of that pattern.

Plenty of people have expressed interest in LLM-backed interfaces that go beyond chat or editor autocomplete. I think HUDs offer a really interesting way to frame one approach to that design challenge.

Link 2025-07-27 TIL: Exception.add_note:

Neat tip from Danny Roy Greenfeld: Python 3.11 added an add_note() method to exceptions.

Here's PEP 678 – Enriching Exceptions with Notes by Zac Hatfield-Dodds proposing the new feature back in 2021.

Link 2025-07-27 The many, many, many JavaScript runtimes of the last decade:

Extraordinary piece of writing by Jamie Birch who spent over a year putting together this comprehensive reference to JavaScript runtimes.

It covers everything from Node.js, Deno, Electron, AWS Lambda, Cloudflare Workers and Bun all the way to much smaller projects like dukluv and txiki.js.

Link 2025-07-28 GLM-4.5: Reasoning, Coding, and Agentic Abililties:

Another day, another significant new open weight model release from a Chinese frontier AI lab.

This time it's Z.ai - who rebranded (at least in English) from Zhipu AI a few months ago. They just dropped GLM-4.5-Base, GLM-4.5 and GLM-4.5 Air on Hugging Face, all under an MIT license.

These are MoE hybrid reasoning models with thinking and non-thinking modes, similar to Qwen 3. GLM-4.5 is 355 billion total parameters with 32 billion active, GLM-4.5-Air is 106 billion total parameters and 12 billion active.
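As a rough back-of-envelope check on what those parameter counts mean for local hardware, here's a minimal sketch that estimates weight size as parameters times bits per weight. The `weight_size_gb` helper is a hypothetical name used only for illustration, and the estimate deliberately ignores quantization metadata (per-group scales and biases) and any layers kept at higher precision, so real files should come out somewhat larger. It uses total parameters rather than active ones, on the assumption that an MoE model still needs every expert loaded even though only some are used per token.

```python
# Rough weight-size estimate: parameters x bits per weight / 8 bytes.
# Assumption: ignores quantization overhead (per-group scales and biases)
# and any layers kept at higher precision, so real files are larger.

GB = 1000 ** 3  # decimal gigabytes


def weight_size_gb(total_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in decimal GB (hypothetical helper)."""
    return total_params * bits_per_weight / 8 / GB


# Total (not active) parameter counts quoted in the text above.
MODELS = {
    "GLM-4.5": 355e9,
    "GLM-4.5 Air": 106e9,
}

for name, params in MODELS.items():
    for bits in (16, 4, 3):
        print(f"{name} at {bits}-bit: ~{weight_size_gb(params, bits):.0f} GB")
```

For GLM-4.5 Air this gives about 212 GB at 16-bit and about 40 GB at 3-bit, which is in the same ballpark as the 205.78GB Hugging Face download and the 44GB 3bit MLX build mentioned earlier in this newsletter.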
They started using MIT a few months ago for their GLM-4-0414 models - their older releases used a janky non-open-source custom license.

Z.ai's own benchmarking (across 12 common benchmarks) ranked their GLM-4.5 3rd behind o3 and Grok-4 and just ahead of Claude Opus 4. They ranked GLM-4.5 Air 6th place just ahead of Claude 4 Sonnet. I haven't seen any independent benchmarks yet.

The other models they included in their own benchmarks were o4-mini (high), Gemini 2.5 Pro, Qwen3-235B-Thinking-2507, DeepSeek-R1-0528, Kimi K2, GPT-4.1, DeepSeek-V3-0324. Notably absent: any of Meta's Llama models, or any of Mistral's. Did they deliberately only compare themselves to open weight models from other Chinese AI labs?

Both models have a 128,000 token context length and are trained for tool calling, which honestly feels like table stakes for any model released in 2025 at this point.

It's interesting to see them use Claude Code to run their own coding benchmarks:

They published the dataset for that benchmark as zai-org/CC-Bench-trajectories on Hugging Face. I think they're using the word "trajectory" for what I would call a chat transcript.

They pre-trained on 15 trillion tokens, then an additional 7 trillion for code and reasoning:

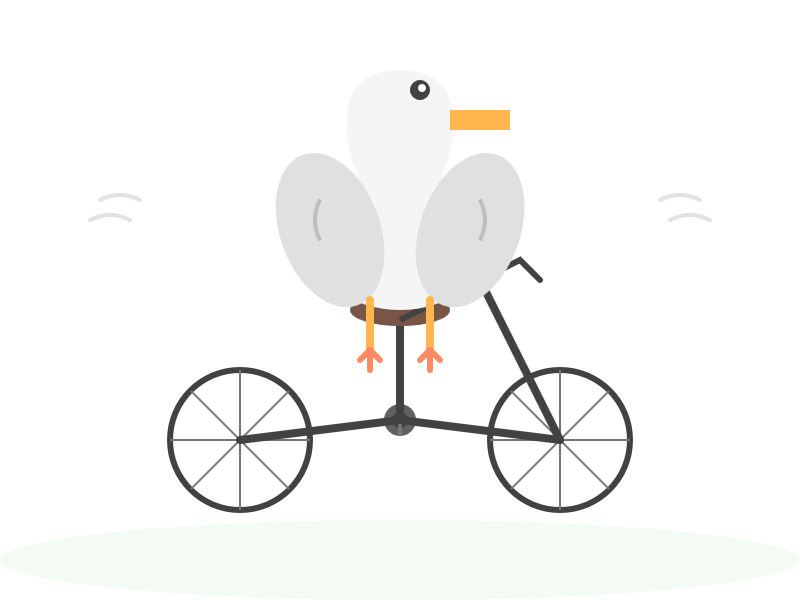

They also open sourced their post-training reinforcement learning harness, which they've called slime. That's available at THUDM/slime on GitHub - THUDM is the Knowledge Engineering Group @ Tsinghua University, the university from which Zhipu AI spun out as an independent company.

This time I ran my pelican benchmark using the chat.z.ai chat interface, which offers free access (no account required) to both GLM 4.5 and GLM 4.5 Air. I had reasoning enabled for both.

Here's what I got for "Generate an SVG of a pelican riding a bicycle" on GLM 4.5. I like how the pelican has its wings on the handlebars:

And GLM 4.5 Air:

Ivan Fioravanti shared a video of the mlx-community/GLM-4.5-Air-4bit quantized model running on an M4 Mac with 128GB of RAM, and it looks like a very strong contender for a local model that can write useful code. The cheapest 128GB Mac Studio costs around $3,500 right now, so genuinely great open weight coding models are creeping closer to being affordable on consumer machines.

Update: Ivan released a 3 bit quantized version of GLM-4.5 Air which runs using 48GB of RAM on my laptop. I tried it and was really impressed, see My 2.5 year old laptop can write Space Invaders in JavaScript now.

Quote 2025-07-28

Quote 2025-07-29

Link 2025-07-29 Qwen/Qwen3-30B-A3B-Instruct-2507: New model update from Qwen, improving on their previous Qwen3-30B-A3B release from late April. In their tweet they said:

I tried the chat.qwen.ai hosted model with "Generate an SVG of a pelican riding a bicycle" and got this:

I particularly enjoyed this detail from the SVG source code:

I went looking for quantized versions that could fit on my Mac and found lmstudio-community/Qwen3-30B-A3B-Instruct-2507-MLX-8bit from LM Studio. Getting that up and running was a 32.46GB download and it appears to use just over 30GB of RAM.

The pelican I got from that one wasn't as good:

I then tried that local model on the "Write an HTML and JavaScript page implementing space invaders" task that I ran against GLM-4.5 Air. The output looked promising, in particular it seemed to be putting more effort into the design of the invaders (GLM-4.5 Air just used rectangles):

But the resulting code didn't actually work:

That same prompt against the unquantized Qwen-hosted model produced a different result which sadly also resulted in an unplayable game - this time because everything moved too fast.

This new Qwen model is a non-reasoning model, whereas GLM-4.5 and GLM-4.5 Air are both reasoners. It looks like at this scale the "reasoning" may make a material difference in terms of getting code that works out of the box.

Link 2025-07-29 OpenAI: Introducing study mode:

New ChatGPT feature, which can be triggered by typing

Thankfully OpenAI mostly don't seem to try to prevent their system prompts from being revealed these days. I tried a few approaches and got back the same result from each one so I think I've got the real prompt - here's a shared transcript (and Gist copy) using the following:

It's not very long. Here's an illustrative extract:

I'm still fascinated by how much leverage AI labs like OpenAI and Anthropic get just from careful application of system prompts - in this case using them to create an entirely new feature of the platform.